As part of his recent PhD thesis, Agustín Martorell has studied the potential of multi-scale representations in music analysis. In particular, he focuses on the description of tonality from score representations and on the analysis of pitch-class sets. We have recently published the results of this study in the Journal of Mathematics and Music: Mathematical and Computational Approaches to Music Theory, Analysis, Composition and Performance. The paper is now online!

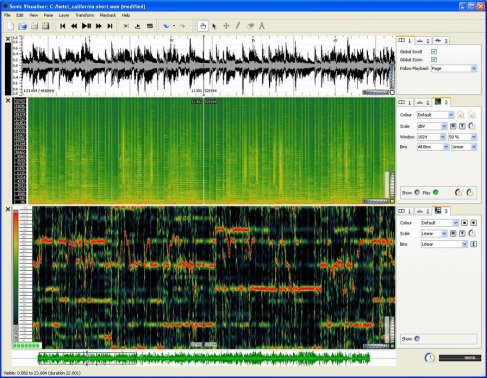

The paper discusses several analyses while addressing the problem of visualization. The work also produced a MATLAB toolbox, which is available for download.

Abstract

This work presents a systematic methodology for set-class surface analysis using temporal multi-scale techniques. The method extracts the set-class content of all the possible temporal segments, addressing the representational problems derived from the massive overlapping of segments. A time versus time-scale representation, named class-scape, provides a global hierarchical overview of the class content in the piece, and it serves as a visual index for interactive inspection. Additional data structures summarize the set-class inclusion relations over time and quantify the class and subclass content in pieces or collections, helping to decide about sets of analytical interest. Case studies include the comparative subclass characterization of diatonicism in Victoria’s masses (in Ionian mode) and Bach’s preludes and fugues (in major mode), as well as the structural analysis of Webern’s Variations for piano op. 27, under different class-equivalences.
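To make the idea of extracting the class content of all possible temporal segments more concrete, here is a minimal Python sketch. It is not the MATLAB toolbox and does not reproduce the paper's implementation. The function names (`tn_class`, `tni_class`, `class_scape`), the frame-based input, and the choice of canonical form (the lexicographically smallest transposition, which may differ from the prime forms listed in standard set-class tables) are all assumptions made for illustration.

```python
def tn_class(pcs):
    """Canonical representative of a pitch-class set under transposition.

    Illustrative convention (an assumption, not the paper's): the
    lexicographically smallest sorted tuple among all transpositions.
    """
    pcs = {p % 12 for p in pcs}
    if not pcs:
        return ()
    # The smallest tuple always starts at 0, so it is enough to transpose
    # each member of the set down to 0 in turn.
    return min(tuple(sorted((p - t) % 12 for p in pcs)) for t in pcs)


def tni_class(pcs):
    """Canonical representative under transposition and inversion."""
    inverted = {(-p) % 12 for p in pcs}
    return min(tn_class(pcs), tn_class(inverted))


def class_scape(frames, classify=tn_class):
    """Map every contiguous segment of frames to its class.

    frames: list of pitch-class collections, one per time frame
            (hypothetical input format).
    Returns a dict keyed by (start_frame, length_in_frames).
    """
    scape = {}
    for start in range(len(frames)):
        running = set()
        for end in range(start, len(frames)):
            # Grow the segment one frame at a time, reusing the union so far.
            running |= {p % 12 for p in frames[end]}
            scape[(start, end - start + 1)] = classify(running)
    return scape


# Toy example: C major, F major and G major triads, one per frame.
frames = [{0, 4, 7}, {5, 9, 0}, {7, 11, 2}]
scape = class_scape(frames)
for (start, length), cls in sorted(scape.items(), key=lambda kv: (kv[0][1], kv[0][0])):
    print(f"start={start} length={length} class={cls}")
```

In this toy example the three single-frame segments share one class (all are major triads), while the segment spanning the whole excerpt yields the seven-note diatonic class. A sequence of n frames produces n(n+1)/2 segments, which hints at the representational problem of massive overlapping that the abstract mentions. Passing `classify=tni_class` switches to a different class-equivalence.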